DNN-SAM: Split-and-Merge DNN Execution for Real-Time Object Detection

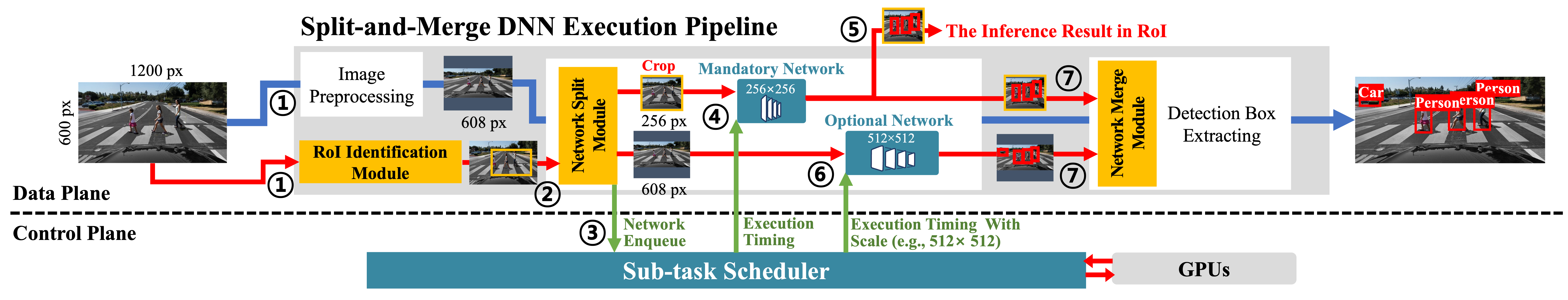

As real-time object detection systems such as autonomous cars need to process input images from multiple cameras and perform ML-based inferencing in real-time, they face significant challenges in delivering accurate and timely inferences. To meet this need, we aim to provide different levels of detection accuracy and timeliness to different portions with different criticality levels within each input image. We develop DNN-SAM, a dynamic Split-And-Merged DNN (deep neural network) execution and scheduling framework that enables seamless Split-And-Merged DNN execution for unmodified DNN models. Instead of processing an entire input image once in a full DNN model, DNN-SAM first splits a DNN inference task into two smaller sub-tasks—a mandatory sub-task dedicated to a safety-critical (cropped) portion of each image and an optional sub-task for processing a down-scaled image—then executes them independently, and finally merges their results into a complete one. To achieve timely and accurate detection for DNN-SAM, we also develop two scheduling algorithms that prioritize sub-tasks according to their criticality levels and adaptively adjust the scale of the input image to meet the timing constraints while minimizing the response time of mandatory sub-tasks or maximizing the accuracy of optional sub-tasks. We have implemented and evaluated DNN-SAM on a representative ML framework. Our evaluation shows DNN-SAM to improve detection accuracy in the safety-critical region by 2.0–3.7× and lower average inference latency by 4.8–9.7× over existing approaches without violating any timing constraints.
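The split, execute, and merge steps described above can be sketched in a few lines of Python. This is only an illustrative sketch, not DNN-SAM's implementation: the helper names (`crop`, `downscale`, `split_and_merge`), the label-image stand-in `toy_detect`, and the IoU-based merge rule that prefers mandatory detections are all assumptions made for the example. The abstract does not specify how results are merged.

```python
from typing import Callable, List, Tuple

Image = List[List[int]]                          # row-major pixel grid
Box = Tuple[float, float, float, float, float]   # x1, y1, x2, y2, score
Region = Tuple[int, int, int, int]               # x1, y1, x2, y2 (end-exclusive)


def crop(image: Image, region: Region) -> Image:
    """Cut the safety-critical region out of the full image."""
    x1, y1, x2, y2 = region
    return [row[x1:x2] for row in image[y1:y2]]


def downscale(image: Image, factor: int) -> Image:
    """Nearest-neighbour subsampling by an integer factor."""
    return [row[::factor] for row in image[::factor]]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two boxes (scores ignored)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def split_and_merge(image: Image, region: Region, factor: int,
                    detect: Callable[[Image], List[Box]],
                    iou_threshold: float = 0.5) -> List[Box]:
    """Run the detector on a crop and on a down-scaled image, then merge."""
    x0, y0, _, _ = region
    # Mandatory sub-task: full-resolution crop, boxes shifted back to image coordinates.
    mandatory = [(x1 + x0, y1 + y0, x2 + x0, y2 + y0, s)
                 for (x1, y1, x2, y2, s) in detect(crop(image, region))]
    # Optional sub-task: down-scaled full image, boxes scaled back up.
    optional = [(x1 * factor, y1 * factor, x2 * factor, y2 * factor, s)
                for (x1, y1, x2, y2, s) in detect(downscale(image, factor))]
    # Assumed merge rule: keep mandatory boxes, add optional boxes that do not duplicate them.
    merged = list(mandatory)
    for box in optional:
        if all(iou(box, m) < iou_threshold for m in mandatory):
            merged.append(box)
    return merged


def toy_detect(image: Image) -> List[Box]:
    """Stand-in for a DNN: treats each non-zero pixel value as an object ID."""
    extents = {}
    for y, row in enumerate(image):
        for x, v in enumerate(row):
            if v:
                x1, y1, x2, y2 = extents.get(v, (x, y, x + 1, y + 1))
                extents[v] = (min(x1, x), min(y1, y), max(x2, x + 1), max(y2, y + 1))
    return [(*extents[k], 1.0) for k in sorted(extents)]


# 8x8 frame: object 1 inside the critical region, object 2 outside it.
frame = [[0] * 8 for _ in range(8)]
for y in (2, 3):
    for x in (4, 5):
        frame[y][x] = 1
for y in (6, 7):
    for x in (0, 1):
        frame[y][x] = 2

detections = split_and_merge(frame, region=(4, 0, 8, 4), factor=2, detect=toy_detect)
```

In this toy run, object 1 is found by both sub-tasks and reported once, while object 2 is found only by the optional, down-scaled pass. In a real system `detect` would be the unmodified DNN model, and the two sub-tasks would be scheduled separately according to their criticality.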

Case study: Emergency braking on 1/10 scale self-driving car

In this experiment, a car moves toward the pedestrian at 0.8 m/s, and the camera's field of view is limited to 1.5 m, i.e., the car must stop within that range. Fig. 8 shows the average stopping distances (plotted as bars), broken down into the distance traveled up to the detection point (perception-reaction) and the actual braking distance (braking), together with the maximum/minimum distances (error bars) over 15 trials with and without DNN-SAM. With Baseline, the stopping decision is made at the 1.2 m spot and the car stops at the 1.7 m spot on average, exceeding the safety distance. The worst-case stopping distance is 2.1 m, far beyond the safety distance. In contrast, DNN-SAM makes the stopping decision at the 0.7 m spot and stops at the 1.0 m spot on average, within the safety distance. The distance up to detection is reduced by 1.9× compared to Baseline. The worst-case stopping distance is 1.3 m, still within the safety distance, demonstrating enhanced safety and quality of control in a real-world environment.
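The breakdown of stopping distance into a perception-reaction part and a braking part can be checked with simple arithmetic. The sketch below uses only the averages and worst cases quoted above, and assumes that distances are in metres, that speed is in m/s, and that the car travels at a constant 0.8 m/s until the stopping decision. That last assumption is needed only for the derived time-to-decision value, which is not reported in the text. The names `summarize`, `RUNS`, and the dictionary keys are hypothetical.

```python
SPEED_M_PER_S = 0.8    # approach speed from the case study
SAFETY_LIMIT_M = 1.5   # camera field of view, i.e. the available stopping range

# Average decision point, average stop point, and worst-case stop point (metres).
RUNS = {
    "Baseline": {"detect_m": 1.2, "stop_m": 1.7, "worst_m": 2.1},
    "DNN-SAM": {"detect_m": 0.7, "stop_m": 1.0, "worst_m": 1.3},
}


def summarize(detect_m: float, stop_m: float, worst_m: float) -> dict:
    """Split the stopping distance and compare it with the safety limit."""
    return {
        # Braking distance is what remains after the perception-reaction distance.
        "braking_m": round(stop_m - detect_m, 2),
        # Assumption: constant speed up to the decision point.
        "time_to_decision_s": round(detect_m / SPEED_M_PER_S, 3),
        "avg_within_limit": stop_m <= SAFETY_LIMIT_M,
        "worst_within_limit": worst_m <= SAFETY_LIMIT_M,
    }


summary = {name: summarize(**values) for name, values in RUNS.items()}
for name, row in summary.items():
    print(name, row)
```

Under these assumptions, the Baseline averages imply about 0.5 m of braking distance and exceed the 1.5 m limit both on average and in the worst case, while the DNN-SAM averages imply about 0.3 m of braking distance and stay within the limit in both cases.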