The goal of this paper is to provide worst-case timing guarantees for real-time I/O requests, while fully utilizing the potential bandwidth for non-real-time I/O requests in NAND flash storage systems. We identify a trade-off between flash chip sharing and I/O workload isolation in terms of timing guarantees and bandwidth. By taking this trade-off into account, we propose a new real-time I/O scheduling framework that enables dynamic isolation between real-time I/O requests to meet all timing constraints and co-scheduling of real-time and non-real-time I/O requests to provide high bandwidth utilization. Our in-depth evaluation results show that the proposed approach significantly outperforms existing isolation approaches in terms of both schedulability and bandwidth.

Motivation

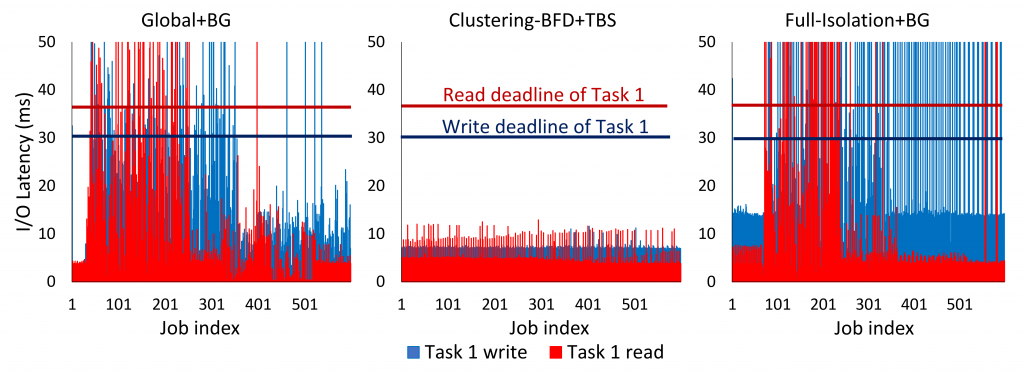

We motivate our approach with a measurement-based case study on an emulated SSD. The case study examines how different levels of flash chip isolation affect the worst-case latency of a real-time I/O request. We first compare two simple approaches for chip isolation over m chips: shared (i.e., no isolation) and fully isolated. Under the shared approach, all I/O requests may read and write data across all m chips, as in existing SSDs. Under the fully-isolated approach, each I/O request is statically assigned to a single chip and may read and write data on that chip only.

Fig. 1a and Fig. 1c show the worst-case latency of a real-time I/O request under the shared and fully-isolated approaches, respectively. Under the shared approach, garbage collection (GC) is triggered less frequently than under the fully-isolated approach because the free space in all chips is available to all tasks. However, each task suffers high GC interference from other tasks, which causes it to violate its timing constraints. Under the fully-isolated approach, there is no GC interference from other I/O requests, but each task incurs high GC overhead to reclaim free pages because free pages in other chips cannot be used. This also leads to timing violations.

To overcome the disadvantages of both approaches, we consider a third approach, called cluster-based isolation, built on the notion of a (chip) cluster. Fig. 1b shows the worst-case latency of a real-time I/O request under the cluster-based approach. By trading off the resource contention caused by sharing chips against the garbage collection overhead caused by isolating chips, we achieve a lower worst-case latency than both the shared and fully-isolated approaches and satisfy the timing constraint.
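The three configurations above can be viewed as points on one spectrum, parameterized by cluster size. The following is a minimal sketch under stated assumptions, not the paper's allocation algorithm: the function names, the equal-size contiguous clusters, and the round-robin task assignment are all hypothetical and chosen only for illustration. It reports two rough proxies: how many chips each task can draw free space from, and how many tasks share a cluster (and can therefore interfere with each other through GC).

```python
from typing import Dict, List


def make_clusters(num_chips: int, cluster_size: int) -> List[List[int]]:
    """Split chip indices 0..num_chips-1 into equal, contiguous clusters.

    Assumption for illustration: clusters are equal-sized and static.
    """
    if cluster_size < 1 or num_chips % cluster_size != 0:
        raise ValueError("cluster_size must evenly divide num_chips")
    return [list(range(start, start + cluster_size))
            for start in range(0, num_chips, cluster_size)]


def assign_tasks(num_tasks: int, clusters: List[List[int]]) -> Dict[int, List[int]]:
    """Map each task to exactly one cluster, round-robin (hypothetical policy)."""
    return {task: clusters[task % len(clusters)] for task in range(num_tasks)}


def summarize(num_tasks: int, num_chips: int, cluster_size: int) -> Dict[str, int]:
    """Return simple proxies for free-space pooling and GC interference."""
    clusters = make_clusters(num_chips, cluster_size)
    mapping = assign_tasks(num_tasks, clusters)
    # Count how many tasks landed on each cluster.
    sharers = [sum(1 for chips in mapping.values() if chips == cluster)
               for cluster in clusters]
    return {
        "chips_per_task": cluster_size,         # larger: more pooled free space
        "max_tasks_per_cluster": max(sharers),  # larger: more GC interference
    }


if __name__ == "__main__":
    # Four tasks on four chips: shared, cluster-based, fully isolated.
    for size in (4, 2, 1):
        print(size, summarize(num_tasks=4, num_chips=4, cluster_size=size))
```

With four tasks and four chips, a cluster size of 4 corresponds to the shared approach, a size of 1 to the fully-isolated approach, and a size of 2 to one possible cluster-based configuration that sits between the two on both proxies.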

Case study: DRAM-emulated SSD

We implement the proposed techniques in the Linux operating system with a DRAM-emulated SSD that models a real-world SSD, and then carry out a case study using real-time (RT) tasks. Our SSD has four NAND flash chips, which are configured with the parameters shown in Table I. Fig. 4 shows the experimental results. We consider four RT tasks and one non-RT task. Under Clustering-BFD+TBS, no RT task misses a deadline, while a considerable number of read and write requests from RT tasks miss their deadlines under Global+BG and Full-Isolation+BG. For the average bandwidth of the non-RT task, Clustering-BFD+TBS achieves 950 IOPS, while Global+BG and Full-Isolation+BG achieve 345 and 202 IOPS, respectively.

Publication

“Dynamic Chip Clustering and Task Allocation for Real-time Flash”

Gyeongtaek Kim, Sungjin Lee, Hoon Sung Chwa

In 58th Design Automation Conference (DAC 2021), San Francisco, December 5-9, 2021