RT-Swap: Addressing GPU Memory Bottlenecks for Real-Time Multi-DNN Inference

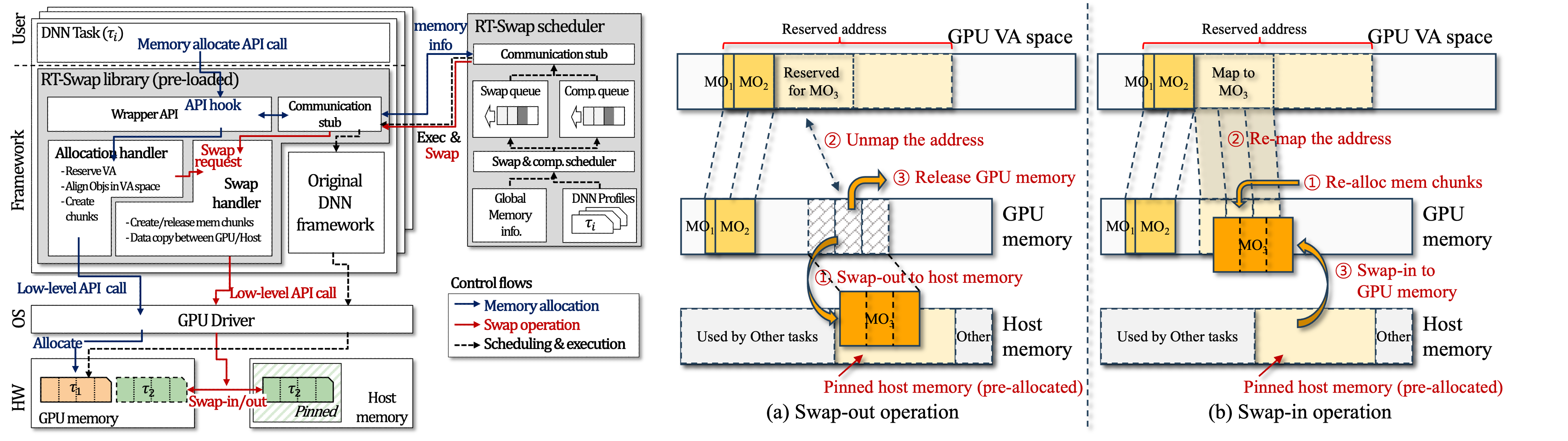

The increasing complexity and memory demands of Deep Neural Networks (DNNs) for real-time systems pose significant new challenges. One of these is the GPU memory capacity bottleneck, where the limited physical memory inside GPUs impedes the deployment of sophisticated DNN models. This paper presents, to the best of our knowledge, the first study to address the GPU memory bottleneck while simultaneously ensuring the timely inference of multiple DNN tasks. We propose RT-Swap, a real-time memory management framework that enables transparent and efficient swap scheduling of memory objects, using the relatively larger CPU memory to extend the available GPU memory capacity without compromising timing guarantees. We have implemented RT-Swap on top of representative machine-learning frameworks and demonstrate its effectiveness: it makes significantly more DNN task sets schedulable, by at least 72% over existing approaches, even when the task sets demand up to 96.2% more memory than the GPU’s physical capacity.